When running an intranet or an otherwise password-protected site, you sometimes need to share confidential or sensitive files, specifically PDFs. It is a challenge to protect the confidential nature of the files while still allowing privileged users to download and work with them normally. One lightweight approach is to stamp a watermark onto each page of the PDF. Instead of just a big "confidential" watermark, you can customize each download so that each file is stamped with, for example, the current user's name and the current date.

With the Online Daily Evaluations portal, student (or "trainee") doctors and attending doctors can easily evaluate each other after every hospital shift with a customizable online course evaluation form. This ensures that mandatory student evaluations are completed within a CanMEDS compliant and RIME compliant evaluation system, and that students get the most out of the learning experience. In such key environments as emergency medicine and anesthesia departments, doctors and patients can appreciate every bit of efficiency! Here is a brief walkthrough of how Online Daily Evaluations works.

The eZ Publish search extension eZ Find offers many sorting parameters for its search results, the most common being by relevance / score, and by date. On large sites, sorting by date can lead to non-relevant results showing up first. What if you want to sort by date and relevance, essentially boosting newer content but still giving you relevant results?
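As a rough illustration of the idea (not necessarily the approach the post takes), one common pattern in Solr, the search engine behind eZ Find, is to multiply the relevance score by a reciprocal function of document age. The sketch below mimics Solr's `recip(x, m, a, b) = a / (m*x + b)` in plain Python; the `boosted_score` helper and the one-year scaling constant are assumptions chosen for this example.

```python
from datetime import datetime, timedelta, timezone

# Milliseconds in a year; using its inverse as `m` means a one-year-old
# document gets half the boost of a brand-new one (assumed scaling).
MS_PER_YEAR = 365.25 * 24 * 3600 * 1000


def recip(x, m, a, b):
    """Same shape as Solr's recip() function: a / (m*x + b)."""
    return a / (m * x + b)


def boosted_score(relevance, published, now):
    """Hypothetical helper: scale a relevance score by a recency boost."""
    age_ms = (now - published).total_seconds() * 1000
    return relevance * recip(age_ms, 1 / MS_PER_YEAR, 1.0, 1.0)


if __name__ == "__main__":
    now = datetime(2020, 1, 1, tzinfo=timezone.utc)
    old_but_relevant = boosted_score(1.0, now - timedelta(days=730.5), now)
    new_less_relevant = boosted_score(0.8, now, now)
    print(old_but_relevant, new_less_relevant)
```

Because the boost multiplies the score instead of replacing it, an irrelevant document never rises to the top just for being new; it only wins against documents of comparable relevance.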

eZ Publish has had multi-language features (also called "internationalization") built into its core for over a decade. If you're considering building a multi-language eZ Publish website from scratch or adding languages to a single-language eZ Publish site, there are many technical details to be aware of. Before you begin, however, it's important to develop a list of requirements for how end users and editors will use the languages on the site.

Some time ago I wrote a blog post about integrating Salesforce and Marketo in a web marketing solution powered by a content management system (in this case, the eZ Publish CMS). Recently, Mugo had the opportunity to migrate one of our clients from Marketo to HubSpot. The decision to move to HubSpot was made for non-technical reasons; regardless, it is useful to review the technical differences and challenges when it comes to integrating the marketing systems with a content management system.

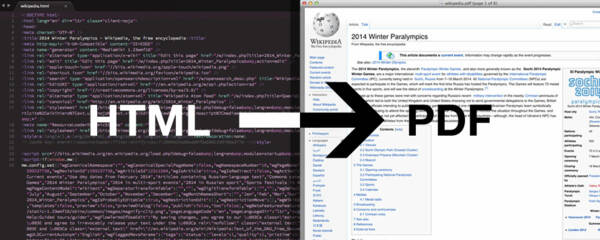

One of our customer websites sells research reports where all of the content is built and managed in the eZ Publish content management system. These reports are served via HTML through a gated website portal. The customer wanted to add a dynamic PDF report generation feature (based on content in the CMS); the PDF template was highly customized with nice layouts and styles, cover and back pages, custom page breaks, and much more. Over the years we've had good experiences with the ParadoxPDF extension. However, due to its lack of HTML5 + CSS3 support and relatively high server load, we decided to look for an alternative solution. We found that wkhtmltopdf does a great job at producing highly styled PDFs, and we were able to integrate it nicely with eZ Publish.

Mugo Web and the Royal Columbian Hospital / Eagle Ridge Hospital emergency departments have created an online shift evaluation system that is now in use in five emergency departments across the region. The system is called Online Daily Evaluations and is being used at Royal Columbian Hospital, Eagle Ridge Hospital, Vancouver General Hospital, Victoria General Hospital, and Kelowna General Hospital.

Google Analytics is the most popular tool for understanding how people are finding and using your site. In addition to its standard reports, you can use its User ID feature to get more fine-grained reporting about registered users. This enables you to better measure, anticipate, and meet or exceed your users' needs.

The eZ Tags extension by Netgen is a great solution to the problems of managing large or ad hoc taxonomies. It especially solves problems around editorial user experience.

Recently, Mugo has added a bunch of improvements to the extension. This post talks about two of them: reordering tags by assigning priorities, and selecting tags from a tree menu.

Having multiple projects stored in one Subversion repository is a challenge if you want to move one of the projects to another repository. Also, over time, moves and deletions can bloat the size of your repository with obsolete, unused data. In this article, we will show you how to extract SVN projects to their own repositories, preserving full commit histories.

Many weather sites offer embeddable widgets to display weather forecasts on your website. However, if you want to customize the look of the widget, your options are usually quite limited. Weather Underground far surpasses the rest of the options by providing a JSON-based API so that you can use their data to build and style your own widgets.

Sitemaps are an important element of search engine optimization (SEO) because they give search engines an accurate outline of the content that exists on your site. One of our client sites recently outgrew Google's sitemap URL limit. Instead of removing content from the sitemap, we implemented a simple solution: a sitemap index that references multiple sitemap files.
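The sitemap index idea can be sketched in a few lines. This is a minimal illustration, not the implementation used on the client site: it assumes the 50,000-URL-per-file limit from the sitemaps.org protocol, and the function names and `example.com` URLs are hypothetical.

```python
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit in the sitemaps.org protocol


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split the full URL list into chunks that each fit in one sitemap file."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap(urls):
    """Return the XML for a single sitemap file listing the given page URLs."""
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(root, "url"), "loc").text = url
    return ET.tostring(root, encoding="unicode")


def build_sitemap_index(sitemap_urls):
    """Return the XML for an index file that points at each sitemap file."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(root, "sitemap"), "loc").text = url
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    pages = [f"https://example.com/page-{i}" for i in range(120_001)]
    chunks = chunk_urls(pages)
    # One sitemap file per chunk, named by position (hypothetical naming scheme).
    names = [f"https://example.com/sitemap-{n}.xml" for n in range(1, len(chunks) + 1)]
    print(len(chunks), "sitemap files")
    print(build_sitemap_index(names))
```

Only the index file needs to be submitted to the search engine; the individual sitemap files are discovered through it.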