Infrastructure as Code: provisioning and configuration management with Vagrant, Terraform, and Ansible

By: Mo Ismailzai | December 8, 2017 | Business solutions, Case study, Productivity tools, vagrant, ansible, terraform, infrastructure as code, and configuration management

Intended audience: technical managers, senior developers

Agile developers must constantly strike a balance between building solutions for a known existing case and building solutions that can scale to handle unknown future cases. On the one hand, Agile philosophy encourages us to build and iterate as necessary: Move Fast and Break Things. On the other, various programming best practices encourage us to build in an extensible and modular way from the start: Do One Thing and Do It Well. On smaller projects, these two goals can be achieved simultaneously; but on larger projects – especially given time and budget constraints – it is sometimes necessary to prioritize one over the other.

Project managers and full-stack developers face such choices almost immediately, during the initial development, staging, and deployment phases. For instance, a project may begin with a narrow scope and require only a single developer’s time. In this case, it often makes sense to forgo provisioning a dedicated development virtual machine (VM) or staging server, and instead, to use generic or shared environments. But as the scope of the project grows, for instance with caching or proxy layers, it often makes sense to implement better development, staging, and production parity.

The case

We recently went through this with a client whose site has experienced explosive growth over the past year, generating nearly 10 times the traffic it was experiencing just 12 months ago. This growth has not happened in a vacuum: FindaTopDoc.com has been producing quality content consistently, and aggressively developing new features. As their core technical partner, we’ve committed to providing elastic development capacity to help implement their vision; this means that we need to quickly ramp up or down on development in response to their needs. Further, given the increasing complexity of FindaTopDoc’s codebase, coupled with their ever-growing audience, we reached a point where we could no longer reliably test our work without mirroring the entire production stack on our local and staging servers.

Put together, this meant that we needed up-to-date replicas of production locally and on staging, and we needed an ongoing system to keep these environments in sync. Further, we needed to onboard new developers quickly so that tasking them with a few hours of work did not require us to absorb several hours of setup time. In short, it was time to add configuration management tools so that we could manage our Infrastructure as Code (more on these buzzwords later). We’ve been using configuration management tools for years and have worked with clients who have embraced Infrastructure as Code from the start -- Hootsuite, for instance -- as well as clients who have adopted it after launch. Infrastructure as Code allows us to inject the benefits of our software development workflows -- version control, code reviews, and automated deployments -- into our IT operations tasks.

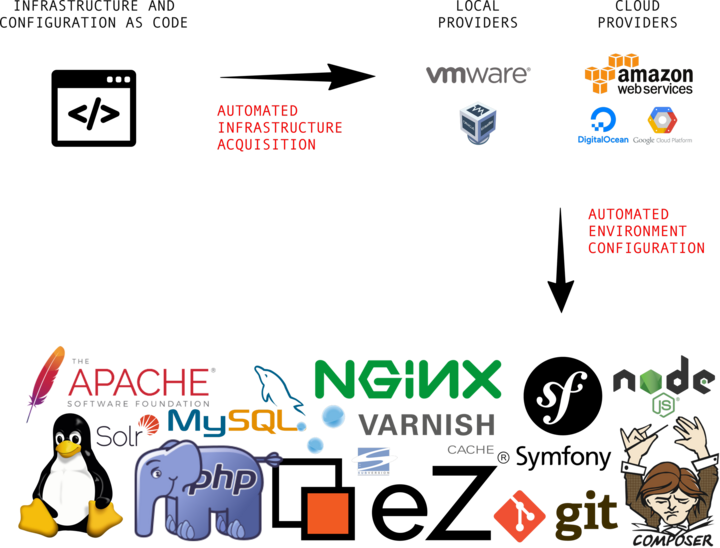

In a perfect world, this means a shift from the workflow depicted in Figure 1 to the workflow depicted in Figure 2. In Figure 1, every time there is a change to the development stack, each of the development, staging, and production environments must be manually updated. The discoloration in Figure 1’s software logos is meant to indicate the drift and version mismatch that often creeps in when these changes are manually configured. In Figure 2, configuration changes have to be described only once, and the actual work of implementing and deploying them across environments is automated.

Of course, we don’t live in a perfect world, nor do we always have the luxury of planning a project from its inception. And even when we do, the project’s scale, scope, and budget could necessitate a more manual approach. Adding a configuration management layer should not be taken lightly because it adds an additional layer to manage and maintain. In theory, this layer magically removes the headache of individual developers struggling to configure local environments and guarantees environmental parity; but in practice, it can stall progress. For instance, do we train all of our developers to interact with this layer or do we concentrate the expertise among select specialists? Training introduces delays and specialists can become bottlenecks. And even as teams learn to balance these concerns, configuration management bugs, incompatibilities, and maintenance will get in the way of day-to-day development. So the challenge is to make strides toward Figure 2 without stalling existing development, patches, and maintenance.

The plan

We reviewed many familiar options, including configuration management or software provisioning tools like Chef, Puppet, and Ansible, as well as infrastructure management or hardware provisioning tools like Terraform and Vagrant. Ultimately, we decided that the best fit for this project was a combination of Vagrant (for development infrastructure), Terraform (for cloud infrastructure), and Ansible (for configuration management).

Before moving forward, a few notes on the technical jargon. Over the past few years, there’s been a good and necessary push to bridge the worlds of software development and IT operations. This drive -- broadly called devops -- has focused on bringing consistency and increased communication between the work of creating software and the work of providing the necessary tools and systems for that software to be developed, staged, and deployed for end users. This is about the only thing anyone in the field agrees on, and everything from the definition of devops itself, to the actual ideals and best practices that the term entails, remains largely contested. It should not be surprising, then, that much of the devops jargon is also inconsistent, contested, and overlapping. Asking questions like “what’s the difference between a configuration management tool and a provisioning tool” will yield different answers based on who you’ve asked, and most of the tools themselves don’t fit neatly into any one category. This type of ambiguity is understandable, especially given that such phrases sometimes have different meanings in the development context than they do in the operations context. We’ll draw again on Figure 2 to help explain how we use and understand some of this terminology.

We call software that helps to automate infrastructure acquisition an infrastructure management or hardware provisioning tool. A provisioning tool is simply something that provides something else, so any software that helps us acquire new server hardware, be it real or virtual, local or in the cloud, fits into the infrastructure management category for us. We call software that helps to automate the configuration of server environments a configuration management or software provisioning tool. Any software that helps us to acquire or configure other software fits into the configuration management category for us. Of course, there’s a lot of overlap and many tools do a little bit of everything, so it’s not always black and white; but nonetheless, these general categories are useful for orienting the conversation.

And in fact, the reason we ultimately settled on Vagrant, Terraform, and Ansible is precisely because these tools are versatile and can be used for multiple purposes. Though Hashicorp markets Vagrant as a tool to create disposable development environments, it is fully capable of creating permanent staging and production environments as well. That being said, however, Terraform truly shines when it comes to cloud infrastructure. Unlike Vagrant, which uses Ruby configuration files, Terraform introduces a domain-specific language called Hashicorp Configuration Language (HCL), which is delightfully simple and readable. So we chose Vagrant to manage development infrastructure (VirtualBox) and Terraform to manage cloud infrastructure (Digital Ocean).

And we chose Ansible for configuration management. We continue to be impressed with Ansible’s simplicity, ease of use, and lack of dependencies (Ansible only requires Python and works via SSH, so target machines do not need to be running an Ansible daemon). Ansible is configured using YAML and domain-specific directives, which ultimately result in readable, reviewable, transparent code. And Ansible is supported by a large library of publicly maintained roles through a service called Ansible galaxy. Think of roles as reusable functions that can do anything from install and configure a package and its related services to pull the latest version of our software from our VCS and perform all of our release protocols.

Having decided on these platforms, what remained was to migrate our production configuration into Ansible and rebuild our environments using code. To keep the scope of this work manageable, we split it into three phases:

Phase I:

- Create a development virtual machine that’s a replica of production.

- Ensure that all required configuration and provisioning is described in code and use software to automatically apply that code to the development virtual machine.

Phase II:

- Drawing on the code and software in Phase I, automatically recreate the staging environment.

- Ensure that the development virtual machine and the staging environment are identically configured.

Phase III:

- Drawing on the code and tools in Phase I and II, automatically recreate the production environment.

- Ensure that the development virtual machine, the staging environment, and the production environment are identically configured.

- Alter existing development workflows such that configuration and provisioning changes must be made in code before being automatically applied to the development, staging, and production environments.

The implementation

Overview

We recently completed Phase I and the remainder of this post will be focused on our approach. Where appropriate, I’ve included code samples, but the aim here is to provide a conceptual overview rather than a tutorial.

Additional Requirements

To help ease our three development teams into the new workflow, we wanted to decouple provisioning from development and to avoid adding new processes or dependencies. And though we generally include production assets in our VMs, this time around we wanted them separated onto a secondary disk, which would shrink the core VM from 60 GB down to 2.5.

Implementation

Our Phase I implementation relies on Vagrant and Ansible and the remainder of this post assumes basic familiarity with both (if you’re new to these platforms and would like to follow along, it’s enough to read these short Vagrant and Ansible primers).

We adopted a two-pronged strategy: first, we would use Vagrant and Ansible to build a VM mirror of our production stack, then we’d use Vagrant to distribute and manage that VM. Since there is a wealth of great resources on basic Vagrant and Ansible usage, I’ll focus mostly on the trickier parts of our implementation.

To start, we organized the project based on the directory structure depicted below:

$ tree -a -L 2 . ├── Vagrantfile -> vagrant/Vagrantfile.BUILD.findatopdoc.local ├── ansible/ │ ├── files/ │ ├── findatopdoc.local.yml │ ├── host_vars/ │ ├── roles/ │ ├── roles_galaxy/ │ └── templates/ └── vagrant/ ├── Vagrantfile.BUILD.findatopdoc.local └── Vagrantfile.RUN.findatopdoc.local

The long-term vision is that as we progress to Phase II and III of our implementation, we’ll add additional playbooks in the ./ansible directory and a new ./terraform directory to handle cloud infrastructure. For now, the Vagrantfile.BUILD.findatopdoc.local and findatopdoc.local.yml files handle the creation and configuration of a VirtualBox VM that will be accessible at findatopdoc.local on the developer’s machine.

Here’s a deeper view of the project structure (I’ve truncated repetitive or non-informative directory listings to just ...):

$ tree -a -L 4 . ├── Vagrantfile -> vagrant/Vagrantfile.BUILD.findatopdoc.local ├── ansible │ ├── files │ │ ├── apache2 │ │ │ ├── ... │ │ └── dotfiles │ │ ├── ... │ ├── findatopdoc.local.yml │ ├── host_vars │ │ ├── findatopdoc.common.yml │ │ └── findatopdoc.local.yml │ ├── roles │ │ ├── common │ │ │ ├── defaults/ │ │ │ ├── files/ │ │ │ ├── handlers/ │ │ │ ├── tasks/ │ │ │ ├── templates/ │ │ │ └── vars/ │ │ ├── copy_file │ │ │ ├── ... │ │ ├── create_file │ │ │ ├── ... │ │ ├── create_user │ │ │ ├── ... │ │ ├── git_clone │ │ │ ├── ... │ │ ├── install_ezfind_solr_initd_service │ │ │ ├── ... │ │ └── rsync_pull │ │ └── ... │ ├── roles_galaxy │ │ ├── geerlingguy.apache │ │ │ ├── .gitignore │ │ │ ├── .travis.yml │ │ │ ├── LICENSE │ │ │ ├── README.md │ │ │ ├── defaults/ │ │ │ ├── handlers/ │ │ │ ├── meta/ │ │ │ ├── tasks/ │ │ │ ├── templates/ │ │ │ ├── tests/ │ │ │ └── vars/ │ │ ├── geerlingguy.composer │ │ │ ├── ... │ │ ├── geerlingguy.git │ │ │ ├── ... │ │ ├── geerlingguy.java │ │ │ ├── ... │ │ ├── geerlingguy.memcached │ │ │ ├── ... │ │ ├── geerlingguy.mysql │ │ │ ├── ... │ │ ├── geerlingguy.nfs │ │ │ ├── ... │ │ ├── geerlingguy.nginx │ │ │ ├── ... │ │ ├── geerlingguy.nodejs │ │ │ ├── ... │ │ ├── geerlingguy.php │ │ │ ├── ... │ │ ├── geerlingguy.php-memcached │ │ │ ├── ... │ │ ├── geerlingguy.php-mysql │ │ │ ├── ... │ │ ├── geerlingguy.php-xdebug │ │ │ ├── ... │ │ └── geerlingguy.varnish │ │ ├── ... │ └── templates │ ├── apache2 │ │ └── findatopdoc.conf.j2 │ ├── cron.d │ │ └── ezp_cronjobs.j2 │ ├── nginx │ │ └── findatopdoc.conf.j2 │ ├── php │ │ └── php.ini.j2 │ └── varnish │ └── default.vcl.j2 └── vagrant ├── Vagrantfile.BUILD.findatopdoc.local └── Vagrantfile.RUN.findatopdoc.local

As you can see, we’ve drawn heavily on publicly maintained roles from Ansible Galaxy (and specifically, on Jeff Geerling’s great work). Because we might want to update these roles in the future, we’ve centralized our customizations (which are in the form of variable definitions) in the ansible/host_vars directory. In general, Ansible variables defined higherup in the project tree override those defined lower down in the project tree, so the variables in ansible/host_vars will supersede the variables in ansible/roles_galaxy/geerlingguy.apache/defaults (this is also true for templates: ansible/templates/php/php.ini.j2 will supersede ansible/roles_galaxy/geerlingguy.php/templates/php.ini.j2).

We put variables that are common to all three environments (such as the project’s web root, for instance) in ansible/host_vars/findatopdoc.common.yml, and variables that are unique to the local development environment (such as the ServerName and ServerAlias entries for the Apache and NGINX configuration files) in ansible/host_vars/findatopdoc.local.yml. In Phase II and III, we’ll build on ansible/host_vars/findatopdoc.common.yml by adding staging.findatopdoc.com.yml and www.findatopdoc.com.yml to handle configuration details that are unique to the staging and production servers.

With our Ansible strategy and configuration in place, we just needed a Vagrantfile that would:

- Start with a Ubuntu 14.04.5 LTS 64 base image, which is what we’re running on production

- Create a secondary disk and attach it to the VM such that it’s accessible at /dev/sdb

- Run Ansible so it can configure the VM (which includes formatting /dev/sdb and eventually mounting it at our project’s ezpublish_legacy/var directory)

Since Vagrant allows us to directly interact with the VirtualBox API, creating and attaching the secondary disk was pretty simple:

config.vm.provider :virtualbox do |vb|

...

unless File.exist?("ezpublish_legacy_var.vmdk")

# create a secondary disk that will be used for the eZ Publish var mount

vb.customize ["createmedium", "--filename", "ezpublish_legacy_var.vmdk", "--variant", "Standard", "--format", "VMDK", "--size", 100 * 1024]

end

# (re)attach the eZ Publish var drive

vb.customize ["storageattach", :id, "--storagectl", "SATAController", "--hotpluggable", "on", "--port", 1, "--device", 0, "--type", "hdd", "--medium", "ezpublish_legacy_var.vmdk"]

...

endAnd tasking Vagrant to run Ansible against the VM is equally simple -- we just have to name ansible or ansible_local as the provisioner (ansible_local runs from within the VM so we don’t even need Ansible installed on the machine we’re running Vagrant on!):

config.vm.provision "ansible_local" do |ansible| ansible.verbose = "v" ansible.playbook = /vagrant/ansible/findatopdoc.local.yml ansible.galaxy_roles_path = /vagrant/ansible/roles_galaxy end

One ansible_local gotcha is that the playbook and galaxy_roles_path need to be accessible from within the VM. Luckily, Vagrant has a default behaviour that mounts the directory Vagrant is run from at /vagrant inside the VM -- so project files are accessible by prepending their relative path with /vagrant: (e.g. ./ansible/findatopdoc.local.yml becomes /vagrant/ansible/findatopdoc.local.yml).

And finally, in the Vagrantfile that ultimately loads the box we build and provision, we just need to attach the secondary disk created above:

# attach the eZ Publish var drive vb.customize ["storageattach", :id, "--storagectl", "SATAController", "--hotpluggable", "on", "--port", 1, "--device", 0, "--type", "hdd", "--medium", "ezpublish_legacy_var.vmdk"]

Of course, this assumes that we’ve distributed the secondary disk to our developers and that the file is present in the project root.

Moving forward

We’re pleased with the results of Phase I, which include a dramatic reduction in developer onboarding time and more reliable testing and development. Our long-term vision is to phase out all manual configuration and handle everything via Ansible. This would mean that a production change to php.ini, for instance, would occur on the developer’s local machine as an alteration to their Ansible Playbook, be committed to the project codebase and code reviewed/tested against staging, and finally, be deployed to production via Ansible. FindaTopDoc is still a ways from fully adopting the Figure 2 workflow but our Move Fast with Stable Infrastructure approach has meant that features and bugfixes continue to roll out on a weekly basis, traffic continues to grow, and the site continues to generate revenue. Stay tuned for Phase II!