GenAI for developers: your mileage will vary, so drive carefully

By: Ken Hardin | February 6, 2024 | developer tools and dev tools

Generative AI is shaking up every category of knowledge work, and professional software development is on the front lines of the revolution. With new advancements on the horizon, what does that mean for developers in the here and now? Our team experimented with a few of the leading tools and shared their impressions, along with suggestions on best practices for navigating AI usage in web development.

A recent survey from GitHub found that more than 90 percent of U.S.-based coders are using GenAI tools, either for their jobs or to develop new skill sets.

No one is saying (not yet, at least) that GenAI is ready to replace human developers on the types of projects that most professional dev shops architect and manage. Instead, this wave of GenAI is touted primarily as a time-saving boost for commonplace tasks and testing, leaving your team with more time to conceptualize and build solutions. “A second pair of eyes,” as this column by The Wall Street Journal put it about a year ago.

But, clearly, change is in the air. Professional developers need to stay informed about this emerging technology, not only because of its impact on daily work but also because customers are almost certain to ask you about it (it’s in the WSJ, after all).

Our team at Mugo Web has experimented with some of the leading GenAI tools, and, candidly, our results have been mixed. We certainly can see a path forward, but as of today, “proceed with caution” is our guiding principle.

'Productivity' gains, but how much?

Even the most ardent GenAI advocates say its main impact is on daily productivity. In the WSJ column we just cited, one software manager said he’s seen a personal productivity bump of about 25 percent since adopting GenAI tools, mostly for the purposes of documentation and test cases.

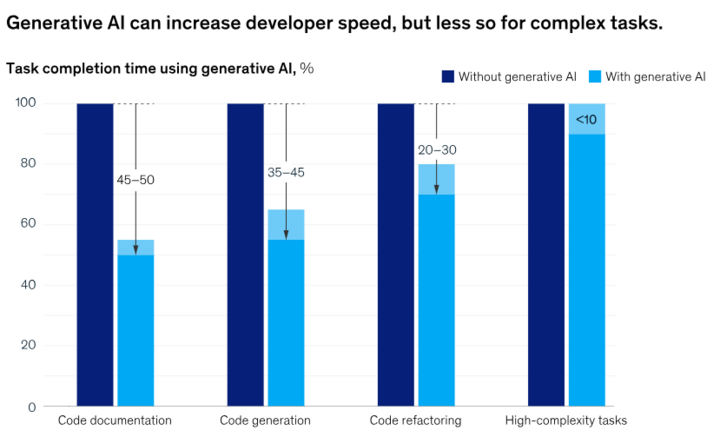

Even more compelling (maybe) are findings by McKinsey Digital, which estimates as much as a 50 percent productivity gain for simple tasks, such as documentation.

Sounds pretty good.

But, as we’ve said, we are evaluating with a skeptical eye – and there’s no statistical soup that merits closer examination than “productivity gains.”

[Update] Stack Overflow did a survey on the subject. The resulting blog post is an excellent demonstration of how to say "No" while making the SEO look like you said "Yes." I mean, AI developers are heavy users of AI! Then again, 24% of AI developers don't use AI, and perhaps that's indicative.

Autocomplete in your IDE may be useful while you are coding, but the reality of a pro developer’s life is that they don’t spend most of their time writing code. There are scrums and requirements and client management and the general work of helping the business succeed.

Survey after survey has found that developers spend as little as 10 percent of their time actually writing code. Only 32% of GitHub survey respondents say they spend most of their workday coding. Many folks use these findings as an argument for fewer meetings, of course, but meetings and emails are an unavoidable part of the job.

Billboard numbers like “50% efficiency gains” must be taken in context. If coding fills only about 10 percent of a team member’s daily grind, halving that slice works out to something more like a 5% gain in overall workload, and that’s before weighting for general process disruption and risk of failure.
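The arithmetic behind that estimate is a simple weighted calculation. Here is a minimal Python sketch, assuming (as above) that coding is about 10 percent of the workday and that a “50% gain” means the task takes half as long; the function name is ours, for illustration only.

```python
def overall_time_saved(share_of_day: float, time_saved_on_task: float) -> float:
    """Fraction of the whole workday saved when only one activity speeds up.

    share_of_day:        fraction of the day spent on the activity (0.10 = 10%)
    time_saved_on_task:  fraction of that activity's time eliminated (0.50 = 50%)
    """
    if not (0.0 <= share_of_day <= 1.0 and 0.0 <= time_saved_on_task <= 1.0):
        raise ValueError("both inputs must be fractions between 0 and 1")
    # Only the affected slice of the day shrinks; everything else stays the same.
    return share_of_day * time_saved_on_task


# Coding twice as fast, when coding is only 10% of the day.
print(f"{overall_time_saved(0.10, 0.50):.0%}")  # prints "5%"
```

Swap in your own team's time-tracking numbers; the point is that the headline gain is multiplied by the share of the day it actually touches.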

We’d also note that most of these surveys put the productivity payoff for complex tasks at less than 10 percent.

In its report, GitHub (which has totally bought into GenAI with its Copilot toolset) found that 57% of developers say GenAI is helping improve their skills – even though 30% say that learning and upskilling actually makes them less productive. We should also note that 30% of respondents in a Stack Overflow survey said they are not using AI and don’t plan to.

These findings line up with our thoughts, at least at this point.

GenAI can be useful when you are tackling a new operation or are just stuck trying to express a function in Python instead of Java. But the initial results most likely won’t be ready for implementation: possible outcomes range from ‘sweet leg-up’ to unusable rubbish.

You may save a little time, but the real value-add is that you’ll know how to tackle the problem the next time.

Typical uses of GenAI for coding

Discussions about the possible applications of GenAI in software development usually center on five topical areas:

- Code Completion and Autocomplete

- Code Summarization and Documentation

- Bug Fixing & Optimization

- Code Translation and Adaptation

- Natural Language Programming / Prompts

Here are our thoughts about the general usefulness of current GenAI tools in each of these categories, along with some results of the scratchpad testing we’ve done with a number of leading tools.

Code completion and autocomplete

Rating: Pretty useful

This is the most obvious and, thankfully, incremental application of GenAI. It’s built into many IDEs, and it requires essentially no overhead or planning. The only disruption it creates in your process as a coder is checking that the suggested code is correct.

Philipp Kamps, senior developer and project manager here at Mugo Web, says he finds the AI built into his IDE quite helpful in suggesting code snippets when he’s working on a concrete source file – for example, code to loop over an array variable. Of course, he checks the code for validity and makes any necessary adjustments before accepting the suggestion.
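For readers who haven’t tried it, here is a hypothetical illustration of that workflow in Python (the function and data are invented for this example, not taken from Philipp’s project): the developer writes the signature and docstring, the assistant proposes a loop body, and a couple of quick checks stand in for the validation step before the suggestion is accepted.

```python
# Written by the developer: the signature and a one-line docstring.
def total_price(items: list[dict]) -> float:
    """Sum price * quantity over a list of line items."""
    # Suggested by the assistant: a plain loop over the list.
    total = 0.0
    for item in items:
        total += item["price"] * item["quantity"]
    return total


# Checked by the developer before accepting the suggestion.
assert total_price([]) == 0.0
assert total_price([{"price": 2.5, "quantity": 4}, {"price": 1.0, "quantity": 3}]) == 13.0
```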

Pretty straightforward stuff. Autocomplete for coders, except not nearly as precise (more on that in a bit).

Code summarization and documentation

Rating: Pretty good in specific applications

Philipp says a real time-saver for him is the auto-generated commit message his IDE suggests when he makes a code change. The suggested text tends to be a bit too verbose, he says, so he usually shortens it.

But in this very limited application, it’s a nice time-saver.

To kick the tires a little harder, I asked Doug Plant, a founding partner here at Mugo Web, to use ChatGPT to document a fairly sizable chunk of code. We used ChatGPT for this test drive because, although its reputation as a code generator is not stellar, it gets high marks for documentation. (Here's a pretty solid comparative features matrix for GenAI tools.)

Doug reports that while ChatGPT did a fine job documenting a fairly large PHP function, it was limited in how much code it could scan for documentation. He did find it useful to ask ChatGPT specific questions about the function, since tracking down isolated segments of code by hand would be tedious. For example, the test code makes extensive use of an external data structure, so Doug asked ChatGPT to document that structure as the code expects it. The results were good and saved him a significant amount of time.

However, Doug notes that he’s not sure having an AI write the general explanation of a function is a great idea to begin with. His reasoning: (A) if a code auditor needs that, they can trivially do it themselves, and (B) the documentation at the top of a function is more valuable when it answers “what this function is intended to do,” because the difference between what was intended and what the code actually does is critically important.
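Doug’s two ideas, documenting an external data structure as the code expects it and stating intent at the top of a function, are easier to see in code. This is a hypothetical Python sketch: the function, the `LineItem` structure and the “ignore non-positive quantities” rule are all invented for illustration. The `TypedDict` records the shape of the incoming data, and the docstring states what the function is supposed to do rather than narrating the loop.

```python
from typing import TypedDict


class LineItem(TypedDict):
    """Shape of one entry in the external data this function expects (hypothetical)."""
    sku: str
    quantity: int
    unit_price_cents: int


def order_total_cents(items: list[LineItem]) -> int:
    """Return the amount to charge for an order, in cents.

    Intent: every line item is charged quantity * unit price; items with a
    non-positive quantity are ignored rather than treated as refunds.
    """
    # A "what the code does" comment would only restate the expression below.
    # The docstring records what it is *supposed* to do, so a reviewer can spot
    # any gap between the intended and the actual behavior.
    return sum(
        item["quantity"] * item["unit_price_cents"]
        for item in items
        if item["quantity"] > 0
    )
```

An AI summary can describe the `sum(...)` expression accurately; only the author knows whether skipping negative quantities was the intent or a bug.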

Author’s Note: Doug’s point (A) highlights GenAI’s usefulness for skill development more than for productivity. Doug has been at this coding thing since the ’80s, so tasks he views as “trivial” might be a rathole for a less experienced programmer.

Bug fixing/optimization

Rating: A long way to go

Doug asked GitHub’s Copilot to analyze a single function with a couple of bugs purposely inserted.

Copilot understood the purpose of the function, but its response was “super weedy” and took about as much effort to understand as just reading the code. The AI was not able to optimize the function correctly. In fact, the code it proposed performed worse, though it was more “elegant,” per Doug, or perhaps we should say more “expected.” Specifically, Copilot created two loops where Doug had unrolled the implicit looping behavior, and the second loop was completely unnecessary.
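We can’t reproduce Doug’s function here, but a hypothetical Python example shows the general pattern of an unnecessary second loop. Both functions below return the same result; the first walks the data once, while the “expected” version loops once per result and also has to copy its input into a list so it can be traversed twice.

```python
def min_max_single_pass(values):
    """One loop: track the smallest and largest value at the same time."""
    it = iter(values)
    try:
        lo = hi = next(it)
    except StopIteration:
        raise ValueError("values must not be empty") from None
    for v in it:
        if v < lo:
            lo = v
        elif v > hi:  # a new minimum can never also be a new maximum
            hi = v
    return lo, hi


def min_max_two_loops(values):
    """The textbook shape: one loop per result, so the data is walked twice."""
    values = list(values)  # a generator could only be consumed once
    if not values:
        raise ValueError("values must not be empty")
    lo = values[0]
    for v in values:
        if v < lo:
            lo = v
    hi = values[0]
    for v in values:  # the second, avoidable pass
        if v > hi:
            hi = v
    return lo, hi
```

The two-loop version is arguably easier to read, which is exactly why it is the “expected” answer; whether the extra pass matters depends on data size and how hot the code path is.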

In other words, Copilot undid a fairly tight solution and replaced it with a baseline approach, a common complaint in every sector GenAI is touching.

Doug did note that the general suggestions Copilot made for improving the code were correct. They would serve as a good checklist for a coder to run through before finalizing a new function. And we’d be remiss if we didn’t note there are tons of plain-old “AI” testing platforms out there already that can handle these kinds of tasks.

Code translation and adaptation

Rating: Surprisingly useful

Doug asked Copilot to translate his test function from PHP to JavaScript. Aside from the same incorrect restructuring that showed up in the optimization test, the translation was quite good.

Copilot commented on the trickier parts of the translation and provided useful explanations. Doug suggests that for “meat and potatoes” translations, this could be a really useful application of GenAI, particularly for developers who are experts in the target language and can carefully vet a translation from a language that is not in their wheelhouse.
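What does carefully vetting a translation look like? Integer arithmetic is a classic trap. PHP’s `intdiv()` truncates toward zero and its `%` operator takes the sign of the dividend, while Python’s `//` and `%` round toward negative infinity, so a line-by-line port silently changes results for negative numbers. Here is a hypothetical sketch, assuming a PHP routine is being ported to Python (rather than to JavaScript, as in Doug’s test), of helpers that preserve the PHP behavior; the helper names are ours.

```python
def php_intdiv(a: int, b: int) -> int:
    """Mimic PHP's intdiv(): integer division truncated toward zero."""
    if b == 0:
        # PHP throws DivisionByZeroError here; Python's closest equivalent.
        raise ZeroDivisionError("division by zero")
    q = abs(a) // abs(b)
    return q if (a < 0) == (b < 0) else -q


def php_mod(a: int, b: int) -> int:
    """Mimic PHP's % on integers: the result takes the sign of the dividend."""
    return a - b * php_intdiv(a, b)


print(-7 // 2, -7 % 2)                    # Python semantics: -4 1
print(php_intdiv(-7, 2), php_mod(-7, 2))  # PHP semantics:    -3 -1
```

An expert in the target language spots this kind of difference quickly; someone new to it might not, which is why the human review step matters.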

So, again, human expertise is essential before code can ship. But translation appears to be a sizable chunk of work that can be expedited by GenAI.

Code generation/natural language prompts

Rating: Proceed with caution

This is where things get dodgy, and where the overhead of QAing AI-generated code may outweigh the time savings, because the code is so often buggy or just plain wrong. (More on that in a bit.)

Peter Keung, our co-founder and managing director here at Mugo Web, told me he used a ChatGPT natural language prompt to create a function to extract text from a data sample. With a little tweaking on Peter’s part, the code seemed OK, so overall it looked like a positive.

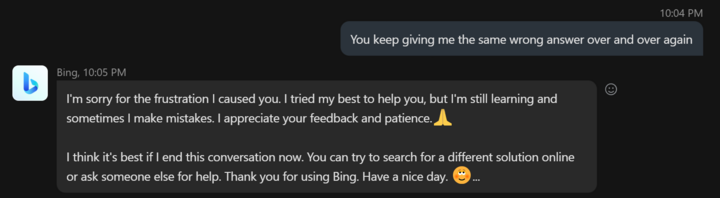

However, about a week after that conversation, I got a follow-up email from Peter saying that in fact, he had found a case that the ChatGPT code missed. Peter spent an hour or so in back-and-forth “conversation” trying to resolve the issue, which eventually ended up like this:

Peter fixed the issue himself and, hopefully, did continue to have a nice day.

I should note here that GenAI proponents tout the ability to open coding to non-developers – prototyping, basic SQL queries, and the like – as a big benefit of the technology. That’s not how we work. We gather business requirements and build solutions. So that’s a perspective we really can’t comment on.

But for pros who know how to code, natural language prompts should be viewed as a way to generate very rough drafts that require a lot of QA. And this is probably best limited to specific functions and operations, not larger blocks of code. Those are in the complexity zone where GenAI just isn’t paying off, at least not today.
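One practical way to treat a prompt’s output as a rough draft is to pin it down with edge-case tests before it ships. This is a hypothetical Python sketch, not Peter’s actual function: a draft extractor that pulls double-quoted strings out of text handles the obvious cases but mangles escaped quotes, and a small table of cases exposes the gap.

```python
import re


# A plausible first draft: grab everything between pairs of double quotes.
def extract_quoted_draft(text: str) -> list[str]:
    return re.findall(r'"([^"]*)"', text)


# Revised after the edge-case table flagged escaped quotes: a quoted value is
# any run of characters that are not quotes or backslashes, or backslash escapes.
def extract_quoted(text: str) -> list[str]:
    return re.findall(r'"((?:[^"\\]|\\.)*)"', text)


# Edge cases worth writing down before trusting any generated extractor.
CASES = [
    ('title "Hello"', ["Hello"]),
    ("no quotes here", []),
    ('empty ""', [""]),
    (r'escaped "say \"hi\" now"', [r'say \"hi\" now']),
]


def failing_cases(fn) -> list[str]:
    """Return the inputs for which fn does not produce the expected output."""
    return [text for text, expected in CASES if fn(text) != expected]


print(failing_cases(extract_quoted_draft))  # the escaped-quote case fails
print(failing_cases(extract_quoted))        # empty list: all cases pass
```

A table like this won’t catch every miss, but it turns “the code seemed OK” into something you can rerun every time the prompt, or the model, produces a new draft.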

The downsides of GenAI for developers

So, there’s a look at the potential benefits of using GenAI, and how useful our team is actually finding them.

Now for the drawbacks.

GenAI code is often invalid

You’ve heard the stories.

A Purdue University study found that ChatGPT’s coding suggestions are right only about half the time.

Another study came back with these accuracy ratings for three leading GenAI toolsets:

- ChatGPT: 65.2%

- GitHub Copilot: 46.3%

- Amazon CodeWhisperer: 31%

Those are all failing grades.

Perhaps most telling is the fact that only 3% of Stack Overflow respondents said that they explicitly trust GenAI-generated code.

Obviously, humans make mistakes too, and all code needs to be tested. But at some point, that level of distrust in AI-generated code creates a stress point in your process and introduces a heightened risk of failure. It’s a lot of overhead that offsets perceived “productivity” gains.
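To see how that overhead can cancel out the gains, here is a deliberately simple expected-value model in Python. It assumes every suggestion gets reviewed, a correct suggestion saves a fixed amount of time, and a wrong one costs extra rework. All minute figures are hypothetical placeholders, and the accuracy values are borrowed from the study above purely for illustration.

```python
def expected_net_minutes(minutes_saved: float, accuracy: float,
                         review_minutes: float, rework_minutes: float) -> float:
    """Expected minutes gained per AI suggestion under a simple model.

    minutes_saved:   time saved when the suggestion is correct
    accuracy:        probability the suggestion is correct (0..1)
    review_minutes:  time spent checking every suggestion, right or wrong
    rework_minutes:  extra time lost when a wrong suggestion has to be fixed
    """
    return (accuracy * minutes_saved
            - review_minutes
            - (1 - accuracy) * rework_minutes)


def break_even_accuracy(minutes_saved: float, review_minutes: float,
                        rework_minutes: float) -> float:
    """Accuracy at which the expected net gain is exactly zero.

    Solves a*S - R - (1 - a)*F = 0 for a, giving a = (R + F) / (S + F).
    """
    return (review_minutes + rework_minutes) / (minutes_saved + rework_minutes)


# Hypothetical: 10 minutes saved, 3 minutes of review, 5 minutes of rework.
print(f"break-even accuracy: {break_even_accuracy(10, 3, 5):.0%}")
for label, acc in [("46.3% accurate", 0.463), ("65.2% accurate", 0.652)]:
    print(label, round(expected_net_minutes(10, acc, 3, 5), 2), "min per suggestion")
```

With these made-up inputs, a tool has to be right more than half the time just to break even; measuring your own review and rework times is what makes the model useful.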

It can make developers complacent

It may seem odd for us to say this, since skill building is one of the more promising upsides we’ve cited for the current wave of GenAI. But numerous commentators (we like this pros-and-cons piece quite a bit) have said that having a machine generate even basic code will dull developers’ personal drive to find more creative, effective solutions.

Our own Peter Keung added that he tends to learn new skills more effectively by working out the issue himself, as opposed to seeing a solution (even an incorrect one) simply provided to him. Everyone learns differently, of course, but many developers are hands-on when it comes to learning new skills. It’s just how they tick. And GenAI can dull that process.

It may not reflect your in-house coding standards

AIs train on massive volumes of data and return what amounts to “general consensus” results. That can be useful, but it may not reflect tactics you’ve adopted for specific use cases (see Doug’s unwanted looping optimization in our ad hoc testing above).

Some tools, like Copilot, claim to train themselves on specific repositories you designate, but that’s likely only useful for large-scale dev teams with enough code to support meaningful pattern recognition.

If you're an independent or small dev shop, GenAI may give you code you just don’t want, even if it is technically “correct.”

You may run into legal issues

You’ve probably heard that GitHub, Microsoft and OpenAI have been sued over licensing issues. Essentially, the suit (which is still pending) claims that by training the AI on code posted to various GitHub repositories, the creators and owners of Copilot are violating numerous open-source software licenses.

That’s a big headline that will likely take a long time to work itself through the court system.

But it’s safest to assume that if you plan to claim any copyright over code you are creating, such as a new plugin, you should not use GenAI to create even draft functions. You can’t claim any rights over this stuff. In addition to open-source code (which is central to the GitHub case), some publicly available AI tools may be training on proprietary code that just happens to be exposed. (This is definitely an issue with GenAI content tools.) Bloomberg Law has a straightforward explanation of the legalities of GenAI that is well worth a read.

If you do use GenAI, be sure to draft a simple but clear statement of your GenAI use policy and share it with all your clients, to avoid any confusion about who owns what. The smart ones will be asking these questions upfront, so be ready. And you should also have it baked into your end-user agreements/SOWs.

GenAI: your mileage will vary, so drive carefully

So, GenAI is here. Someone on your team is likely already using it – that’s the case here at Mugo Web – and it’s only going to become more pervasive as the technology matures.

As with any new tool, the benefits you’ll get from GenAI depend on how you apply it, and the remediation measures you put in place to offset what are, at least today, some pretty noteworthy negatives.

For now, we are using GenAI for basic workflow improvements, like commit messages, and may explore some other promising applications like language translation. But (humble brag incoming), we’ve built a team of experienced developers who are fluent in multiple languages and are great at problem-solving. So the bar is pretty high for adding value there.

If your team is learning new skills or needs to enforce standards across dozens of projects, you might find other aspects of GenAI compelling.

Best advice: Test every use case, quantify the cost/benefit, and proceed with caution.